Overview of our work.

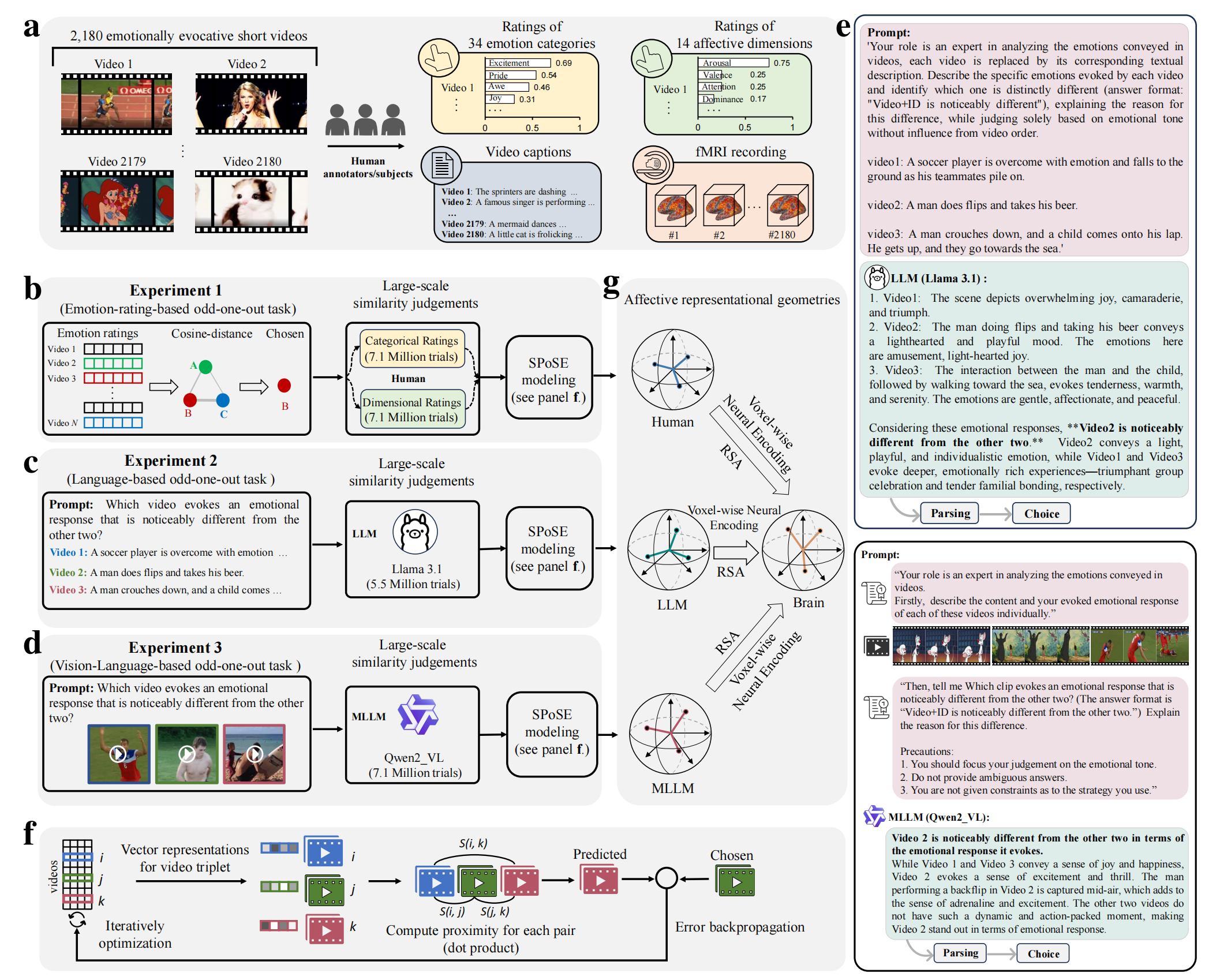

Overview of the experimental and analytical pipeline.

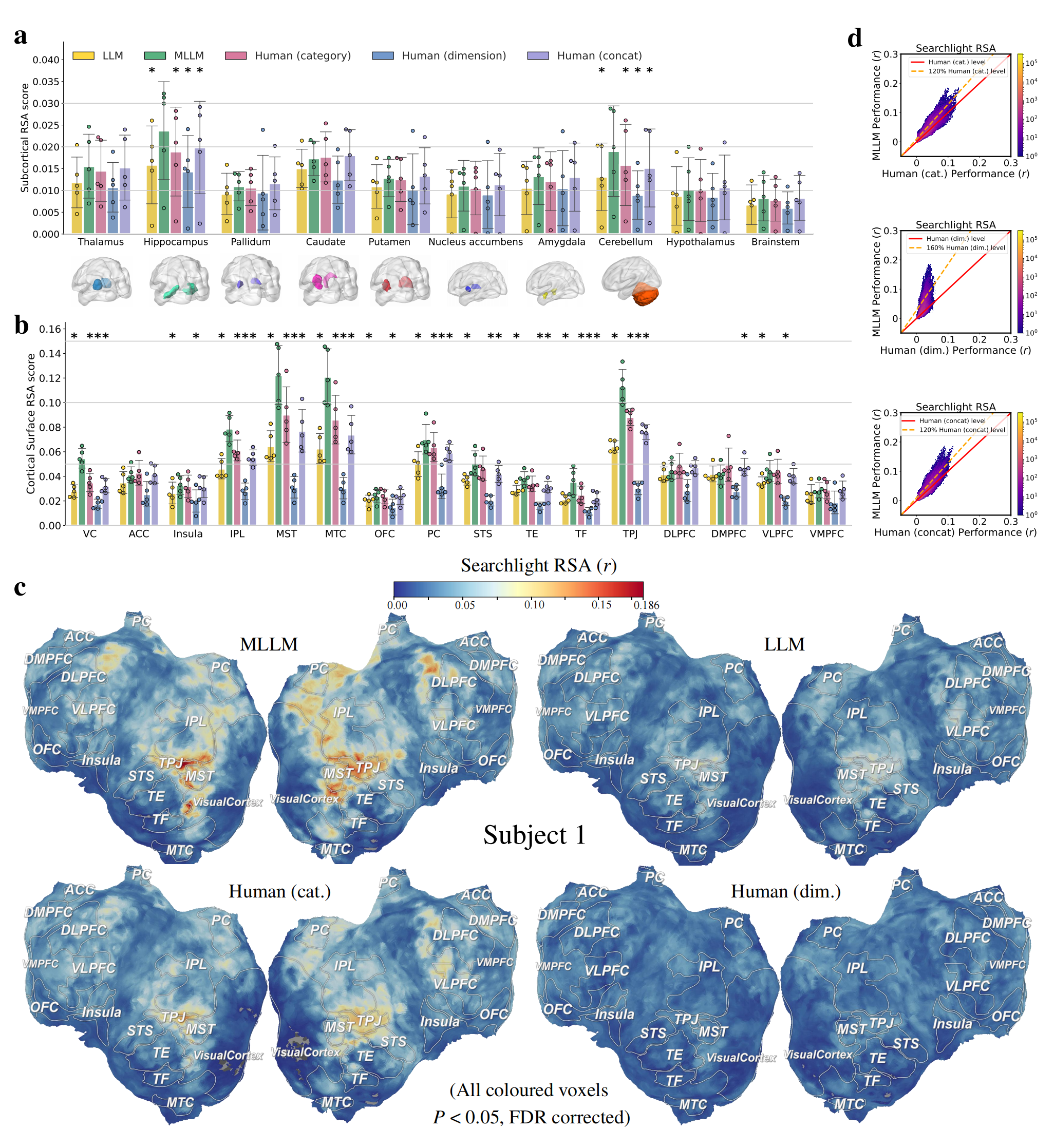

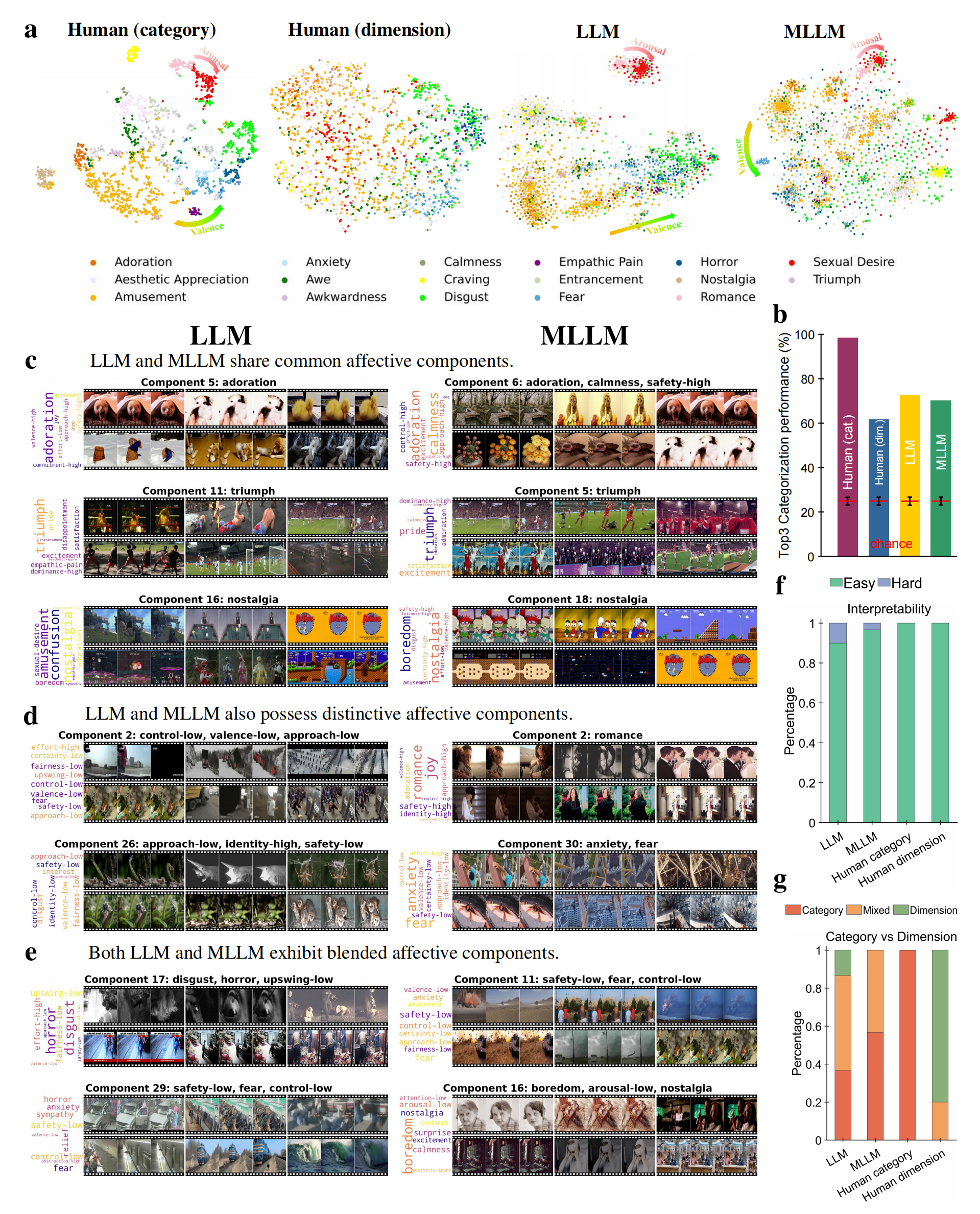

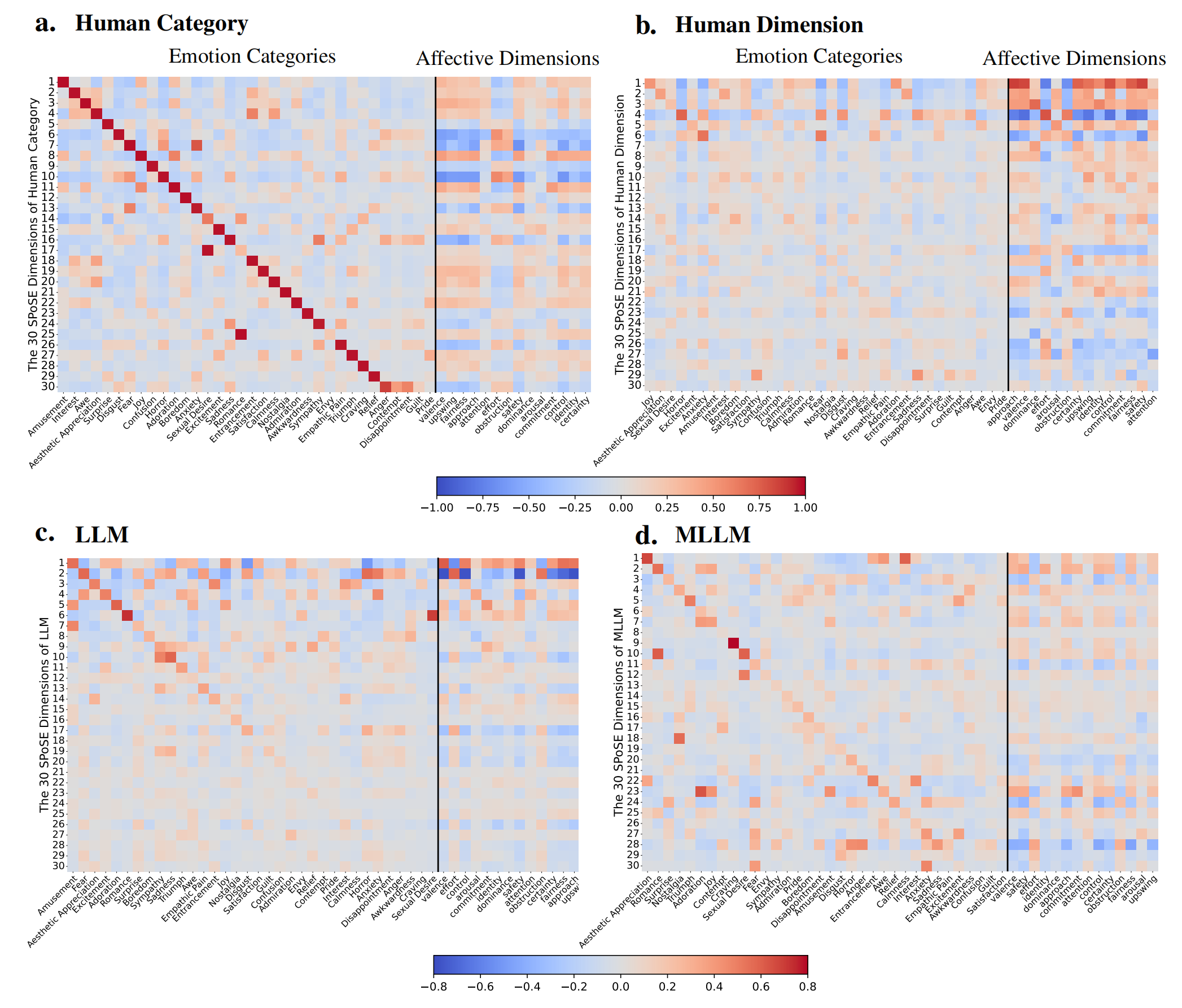

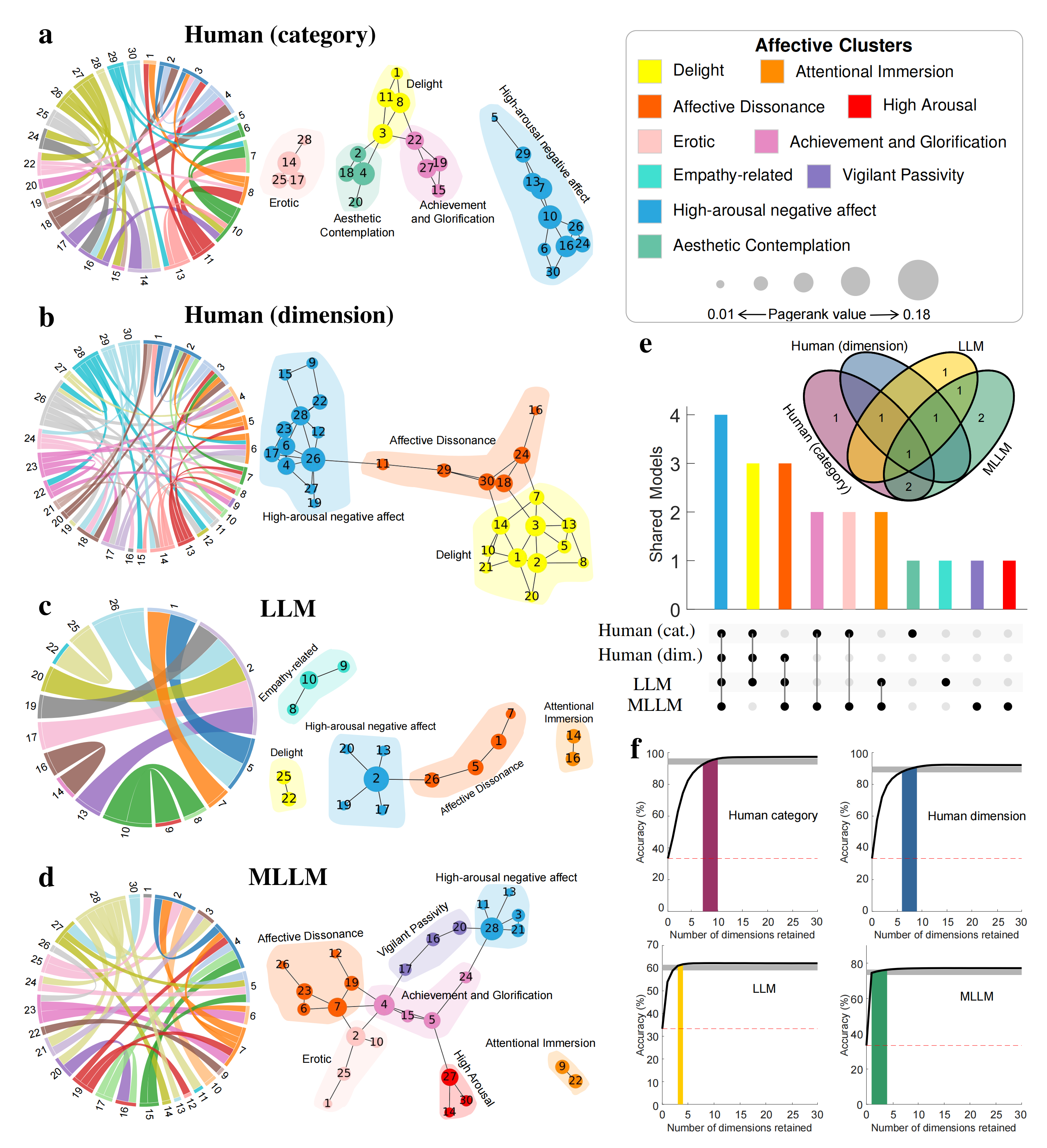

a, The study used a database of 2,180 emotionally evocative videos with rich pre-existing annotations: human ratings on discrete emotion categories and on continuous affective dimensions, detailed textual descriptions, and fMRI data recorded from viewers. b–d, Affective embeddings were derived for four systems (human categorical ratings, human dimensional ratings, an LLM, and an MLLM) using a triplet odd-one-out behavioral paradigm. Human similarity judgments were simulated from the cosine similarity between the videos' existing human rating vectors, whereas the models performed the task directly. e, Example prompts and responses for the LLM and MLLM. f–g, Latent embeddings were learned from more than 7.1 million triplet judgments using SPoSE (f), and the resulting representational spaces were compared with one another and with neural data (g).
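The caption compresses three computational steps. The NumPy sketch below illustrates them under stated assumptions, not as the authors' code: (1) a simulated rater picks as odd one out the video left over from the most cosine-similar pair of rating vectors; (2) SPoSE (Sparse Positive Similarity Embedding) scores each pair by the dot product of nonnegative embeddings, turns the three scores into choice probabilities with a softmax, and adds an L1 penalty for sparsity; (3) representational spaces are compared by rank-correlating the upper triangles of their dissimilarity matrices, a common RSA choice assumed here because the caption does not name the metric. All function names, the L1 weight, and the toy data sizes are hypothetical, and the gradient-based fitting loop is omitted.

```python
import numpy as np


def cosine_similarity_matrix(ratings: np.ndarray) -> np.ndarray:
    """Pairwise cosine similarity between rows of an (n_items, n_features) matrix."""
    norms = np.linalg.norm(ratings, axis=1, keepdims=True)
    unit = ratings / np.clip(norms, 1e-12, None)  # guard against all-zero rows
    return unit @ unit.T


def simulate_odd_one_out(sim: np.ndarray, triplet: tuple[int, int, int]) -> int:
    """Assumed rule: the odd one out is the item left out of the most similar pair."""
    i, j, k = triplet
    # Each candidate pair is stored together with the item it leaves out.
    pairs = [(sim[i, j], k), (sim[i, k], j), (sim[j, k], i)]
    return max(pairs, key=lambda p: p[0])[1]


def spose_pair_probs(X: np.ndarray, triplet: tuple[int, int, int]) -> np.ndarray:
    """Softmax over dot-product similarities of pairs (i,j), (i,k), (j,k).

    Entry 0, 1, 2 is the probability that k, j, i respectively is the odd one out.
    """
    i, j, k = triplet
    logits = np.array([X[i] @ X[j], X[i] @ X[k], X[j] @ X[k]])
    logits = logits - logits.max()  # numerical stability
    e = np.exp(logits)
    return e / e.sum()


def spose_loss(X, triplets, odd_ones, l1_weight=0.01):
    """Mean negative log-likelihood of the observed choices plus an L1 sparsity penalty."""
    nll = 0.0
    for (i, j, k), odd in zip(triplets, odd_ones):
        probs = spose_pair_probs(X, (i, j, k))
        # The chosen pair is the one that excludes the odd item.
        idx = {k: 0, j: 1, i: 2}[odd]
        nll -= np.log(probs[idx])
    return nll / len(triplets) + l1_weight * np.abs(X).sum()


def rdm_from_embedding(X: np.ndarray) -> np.ndarray:
    """Representational dissimilarity matrix defined as 1 - cosine similarity."""
    return 1.0 - cosine_similarity_matrix(X)


def rsa_spearman(rdm_a: np.ndarray, rdm_b: np.ndarray) -> float:
    """Spearman correlation of two RDMs' upper triangles (ties ranked arbitrarily)."""
    iu = np.triu_indices_from(rdm_a, k=1)
    ra = np.argsort(np.argsort(rdm_a[iu])).astype(float)
    rb = np.argsort(np.argsort(rdm_b[iu])).astype(float)
    return float(np.corrcoef(ra, rb)[0, 1])


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    ratings = rng.random((10, 8))  # hypothetical: 10 videos x 8 rating dimensions
    sim = cosine_similarity_matrix(ratings)
    triplets = [tuple(rng.choice(10, size=3, replace=False)) for _ in range(200)]
    odd = [simulate_odd_one_out(sim, t) for t in triplets]
    X = np.abs(rng.normal(size=(10, 5)))  # nonnegative toy embedding (untrained)
    print("SPoSE loss:", spose_loss(X, triplets, odd))
    print("RSA:", rsa_spearman(rdm_from_embedding(ratings), rdm_from_embedding(X)))
```

In an actual fit, `X` would be optimized by gradient descent on `spose_loss` while being kept nonnegative (for example by clamping after each step); the demo only evaluates the loss for an untrained embedding. Tie handling in `rsa_spearman` is simplified; a library routine such as `scipy.stats.spearmanr` averages tied ranks.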